Continuous Integration and Continuous Delivery (commonly referred to as CI and CD respectively, or CI/CD for short) are concepts that allow software engineers to release value to customers much more frequently and with more confidence.

To understand why and how, we are first going to depict a world without CI/CD.

Why bother? A world without CI/CD

Meet Reto, DevOps Engineer at DevOps Ready. Reto has been working on a new feature for the past four weeks: it seems to work fine on his local machine, and he would like to make it available to customers. The release process at DevOps Ready is defined as follows:

- Make the new feature available on a test server.

- Have another employee check that new feature for Quality Assurance.

- In the past, some releases have caused problems for customers. To ensure quality, there is now a list of important checks to make for each application before every release in the wiki.

- Once Quality Assurance has validated the feature, make the new feature available to customers.

- If an issue occurs, revert the feature as soon as possible.

Reto's department takes care of a few web applications, so for him and his team, "make the new feature available" means deploying the application to the servers via FTP. Reverting the changes likewise means restoring the last working version on the server.

Problems with this approach

We are going to split the issues into two groups: Quality Assurance and Deployment.

Quality Assurance

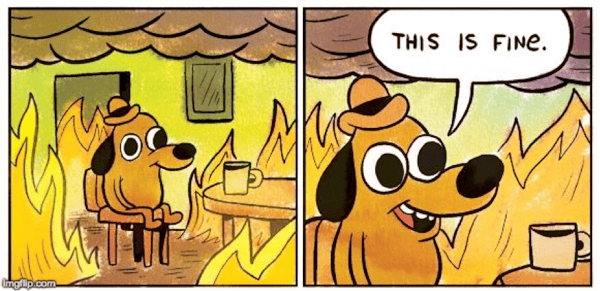

Reto has been working on a new feature for the past four weeks: it seems to work fine on his local machine.

Reto can easily test the feature he is working on, but can he be sure that the application works as a whole?

He could click through every page of the site manually, if the site is small enough, and check that "everything looks good".

What if the application codebase is made of tens of thousands of lines of code? How do we know that our change is not breaking the application somewhere?

To ensure quality, there is now a list of important checks to make for each application before every release in the wiki.

Who maintains that list? Who updates it, and when? How do we guarantee that anyone actually ran the checks?

What if the list contains 30+ points to check? The same points have to be checked over and over again, release after release.

Other thoughts

Being chosen for that kind of QA must feel like a major pain. And if there is a minor bug in Reto's feature, the whole process has to be repeated!

Also, the QA list is likely to grow as the codebase grows.

Deployment

Make the new feature available on a test server.

This process will be the same for every release: check out the code, put it in a specific folder, apply database changes, restart the web server.

A list of steps should be maintained somewhere visible to all engineers (especially useful for new hires), which is cumbersome and likely to become outdated quickly.

Also, nothing prevents an engineer from performing a necessary manual step without recording it in the list. On the next deployment, that step is forgotten and the deployment fails.
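One way to avoid an outdated list is to make the script itself the list. The following is a minimal sketch in Python, not DevOps Ready's actual process: the step names mirror the steps above, and the `noop` actions are placeholders that a real script would replace with calls to version control, a migration tool, and so on.

```python
from typing import Callable, List, Tuple

# A step is a human-readable name plus the action that performs it.
Step = Tuple[str, Callable[[], None]]


def noop() -> None:
    """Placeholder action (assumption): a real step would do actual work."""


# The ordered list of steps lives in code, so it cannot drift from reality.
DEPLOY_STEPS: List[Step] = [
    ("check out the code", noop),
    ("put it in the release folder", noop),
    ("apply database changes", noop),
    ("restart the web server", noop),
]


def run_steps(steps: List[Step]) -> List[str]:
    """Run every step in order and return the names of the completed steps.

    An exception raised by any action aborts the run, so later steps
    never execute on top of a failed one.
    """
    completed: List[str] = []
    for name, action in steps:
        action()
        completed.append(name)
    return completed


if __name__ == "__main__":
    for name in run_steps(DEPLOY_STEPS):
        print("done:", name)
```

Because the steps are executed rather than read, a step that is missing from the list is also missing from the deployment, which makes the omission visible immediately.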

Once Quality Assurance has validated the feature, make the new feature available to customers.

This is actually the same process as above: releasing to a test server or to a server visible to customers should follow exactly the same procedure.

If an issue occurs, revert the feature as soon as possible.

Reverting code should also be the exact same action every time, regardless of the feature or bugfix we are trying to remove.

What to do? Automate all the things!

DevOps principles tell us that our feedback cycles should be short. At DevOps Ready, it is hard to release features both often and with confidence: it is likely to be one or the other.

Thankfully, some automation principles can help with most of the concerns raised in the previous sections.

Automated testing

All code is guilty until proven innocent. - Anonymous

Manual tests are tests conducted by humans on an application.

Automated tests are tests conducted by a computer on an application.

Both verify that your application works as it should, but automated tests have a significant advantage: you can verify that an application works any number of times, without any human interaction or effort. Computers never get bored! Manually testing that the whole application works would require significant effort from the tester every time a new release is made.

Automated tests make it easier to know whether a feature will continue to work as it should a year from now.
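To make this concrete, here is a small sketch using Python's built-in `unittest` module. The `apply_discount` function is a hypothetical piece of application code invented for this example; the point is only that the checks are written once and can then be re-run on every change.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical application code: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self) -> None:
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_invalid_percent_is_rejected(self) -> None:
        with self.assertRaises(ValueError):
            apply_discount(200.0, 150)


def run_suite() -> bool:
    """Run the tests above and report whether all of them passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


if __name__ == "__main__":
    print("all tests passed:", run_suite())
```

If someone later changes `apply_discount` in a way that breaks either expectation, the suite reports it without anyone having to remember to check by hand.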

Now, one question remains: when are you supposed to run these tests? Developers could (and should) run them while developing, but what if they forget?

As we are about to see, Continuous Integration provides an answer to the question at hand.

Continuous Integration

Continuous Integration ensures that the feature/bugfix an engineer has written does not break the rest of the application (provided of course that some automated tests are present for the application). In other words, it checks that the new piece of code "integrates well" with the existing application.

Every time new code is proposed for the application (usually in a Pull/Merge request), we ask the continuous integration server to ensure that the app is not broken in any way. If it is broken, we do not allow that code to be merged into the master branch. And if a problem with an already merged feature is detected later on, the build will not be allowed to be deployed.

Some CI solutions: Travis CI, CircleCI, GitLab, ...
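The gate itself boils down to a simple rule: run every check, and allow the merge only if all of them succeed. The sketch below illustrates that rule in Python; it is not how any particular CI product is implemented, and the `passing` and `failing` commands are stand-ins for a real test suite or linter.

```python
import subprocess
import sys
from typing import List, Sequence


def run_checks(commands: Sequence[List[str]]) -> bool:
    """Return True only if every command exits with status 0.

    The first failing command stops the run, mirroring a CI pipeline
    that refuses the merge as soon as one check fails.
    """
    for command in commands:
        result = subprocess.run(command)
        if result.returncode != 0:
            return False
    return True


# Stand-in checks (assumption): real ones would run the test suite, a linter, etc.
passing = [sys.executable, "-c", "raise SystemExit(0)"]
failing = [sys.executable, "-c", "raise SystemExit(1)"]

if __name__ == "__main__":
    print("merge allowed:", run_checks([passing, passing]))
    print("merge allowed:", run_checks([passing, failing]))
```

Hosted CI services apply the same idea: they run the configured commands for each Pull/Merge request and report a pass or fail status based on the exit codes.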

Continuous Delivery

Thanks to CI, you now have the confidence that your application is working the way you expect it to, but how do you show this application to your customers? Also, if something does go wrong in production, do you have a way to revert those changes quickly enough?

Enter Continuous Delivery.

The outcome here is simple: we should be able to deploy an application to an environment (staging/production) in at most one action, be it a git push, a click, a Slack message to a bot, or a command-line tool. Several tools are available for that purpose.

For deploying code to a host, Capistrano is quite a standard (at least within the Ruby community).

If you use containers and a container orchestration system (Kubernetes, OpenShift, ...), much of the deployment process is already streamlined for you.

Some CI/CD solutions: Codeship, GitLab, Kubernetes, OpenShift, ...
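As an illustration of "one action to deploy, one action to revert", the sketch below keeps each release in its own folder and points a `current` symlink at the active one. This layout is an assumption chosen for the example (it requires a POSIX-like filesystem), and the release names are hypothetical timestamps that sort chronologically.

```python
import os
import tempfile
from pathlib import Path


def activate(root: Path, release: str) -> None:
    """Point root/current at root/releases/<release> via an atomic rename."""
    target = root / "releases" / release
    if not target.is_dir():
        raise FileNotFoundError(target)
    tmp_link = root / "current.tmp"
    if tmp_link.is_symlink():
        tmp_link.unlink()
    tmp_link.symlink_to(target)
    os.replace(tmp_link, root / "current")


def deploy(root: Path, release: str) -> None:
    """Create the release folder and make it the active release."""
    # A real deployment would copy the built application here.
    (root / "releases" / release).mkdir(parents=True)
    activate(root, release)


def rollback(root: Path) -> str:
    """Re-activate the release just before the current one and return its name."""
    releases = sorted(p.name for p in (root / "releases").iterdir())
    current = (root / "current").resolve().name
    index = releases.index(current)
    if index == 0:
        raise RuntimeError("no earlier release to roll back to")
    activate(root, releases[index - 1])
    return releases[index - 1]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        deploy(root, "20240101")
        deploy(root, "20240102")
        print("rolled back to", rollback(root))
```

Deploying and reverting are now the same kind of operation: both only change which folder `current` points to, regardless of what the release contains.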

Summary

According to the Puppet State of DevOps Report 2017, high-performing teams:

- perform 46x more frequent code deployments than lower-performing teams.

- have 440x faster lead time from commit to deploy than lower-performing teams.

- have 96x faster mean time to recover from downtime than lower-performing teams.

- have 5x lower change failure rate.

CI/CD is one of the DevOps best practices behind such high-performing teams.

To recap: Automated testing is the best way to ensure that your application behaves the way you want it to.

Continuous Integration helps us know that the app is not broken as a whole, and in particular that a new feature will not break the existing codebase.

Continuous Delivery helps us release value to customers frequently.

What’s next?

Part 2 of this DevOps CI/CD series will focus on setting up a real-life CI/CD pipeline: stay tuned!
